publications

2025

-

Emilia Magnani, Marvin Pförtner, Tobias Weber, and Philipp HennigIn Proceedings of the 42nd International Conference on Machine Learning (ICML), 2025

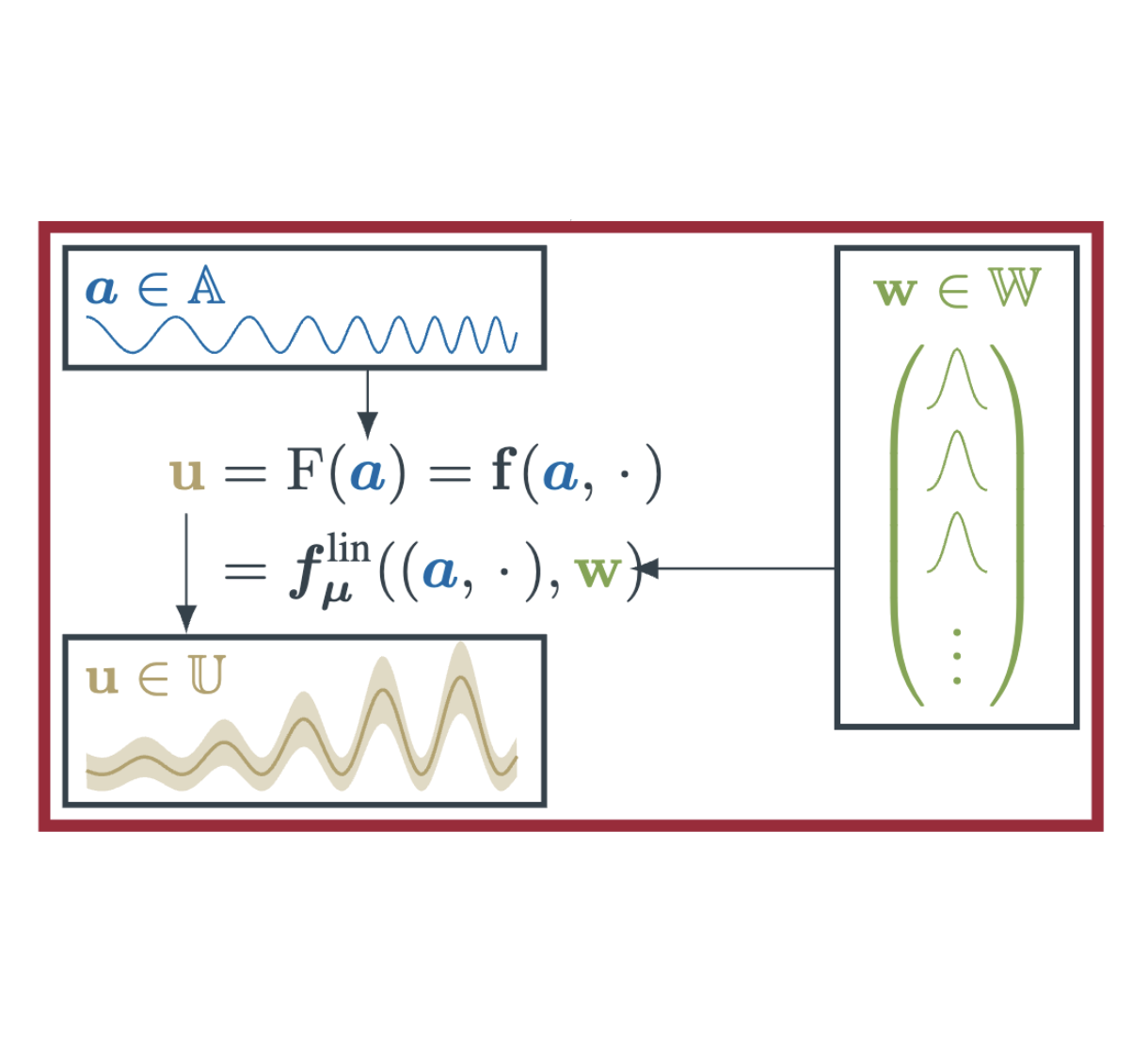

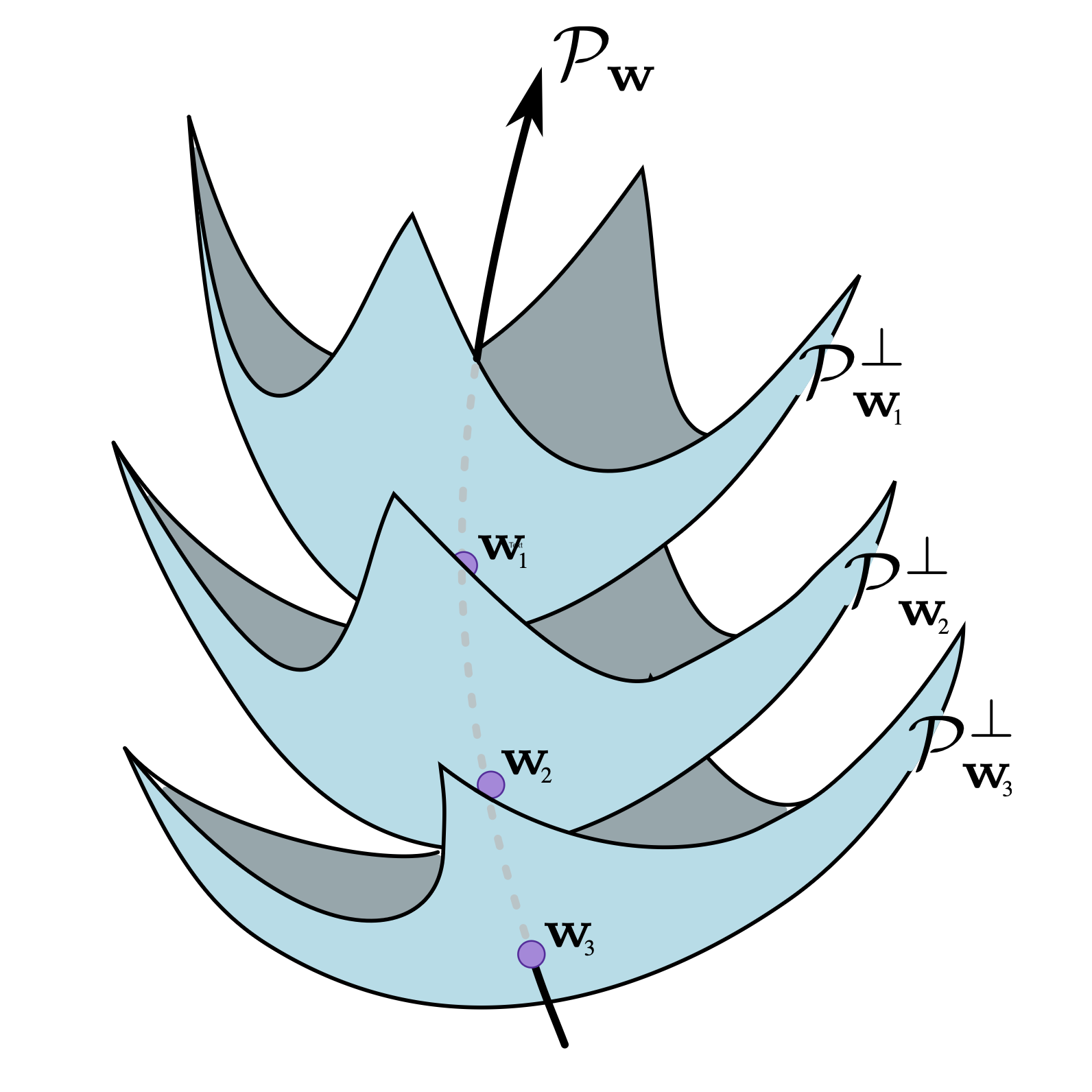

Emilia Magnani, Marvin Pförtner, Tobias Weber, and Philipp HennigIn Proceedings of the 42nd International Conference on Machine Learning (ICML), 2025Neural operators generalize neural networks to learn mappings between function spaces from data. They are commonly used to learn solution operators of parametric partial differential equations (PDEs) or propagators of time-dependent PDEs. However, to make them useful in high-stakes simulation scenarios, their inherent predictive error must be quantified reliably. We introduce LUNO, a novel framework for approximate Bayesian uncertainty quantification in trained neural operators. Our approach leverages model linearization to push (Gaussian) weight-space uncertainty forward to the neural operator’s predictions. We show that this can be interpreted as a probabilistic version of the concept of currying from functional programming, yielding a function-valued (Gaussian) random process belief. Our framework provides a practical yet theoretically sound way to apply existing Bayesian deep learning methods such as the linearized Laplace approximation to neural operators. Just as the underlying neural operator, our approach is resolution-agnostic by design. The method adds minimal prediction overhead, can be applied post-hoc without retraining the network, and scales to large models and datasets. We evaluate these aspects in a case study on Fourier neural operators.

@inproceedings{Magnani2025LUNO, author = {Magnani, Emilia and Pf\"ortner, Marvin and Weber, Tobias and Hennig, Philipp}, title = {Linearization Turns Neural Operators into Function-Valued {Gaussian} Processes}, booktitle = {Proceedings of the 42nd International Conference on Machine Learning (ICML)}, volume = {267}, series = {Proceedings of Machine Learning Research}, publisher = {PMLR}, address = {Vancouver, Canada}, year = {2025}, archiveprefix = {arXiv}, eprint = {2406.05072}, primaryclass = {cs.LG}, doi = {10.48550/arxiv.2406.05072}, url = {https://arxiv.org/abs/2406.05072}, } -

Tim Weiland, Marvin Pförtner, and Philipp HennigIn Proceedings of the 28th International Conference on Artificial Intelligence and Statistics (AISTATS), 2025

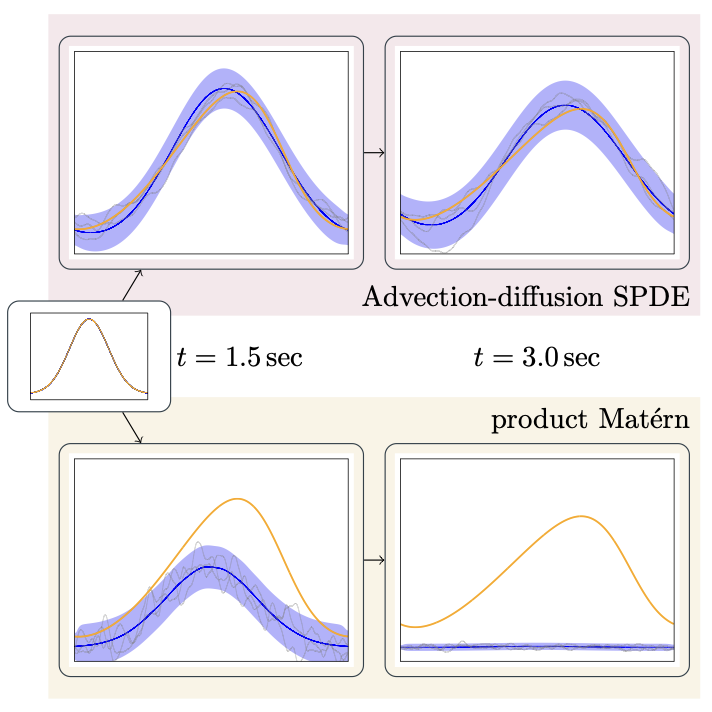

Tim Weiland, Marvin Pförtner, and Philipp HennigIn Proceedings of the 28th International Conference on Artificial Intelligence and Statistics (AISTATS), 2025Mechanistic knowledge about the physical world is virtually always expressed via partial differential equations (PDEs). Recently, there has been a surge of interest in probabilistic PDE solvers – Bayesian statistical models mostly based on Gaussian process (GP) priors which seamlessly combine empirical measurements and mechanistic knowledge. As such, they quantify uncertainties arising from e.g. noisy or missing data, unknown PDE parameters or discretization error by design. Prior work has established connections to classical PDE solvers and provided solid theoretical guarantees. However, scaling such methods to large-scale problems remains a fundamental challenge primarily due to dense covariance matrices. Our approach addresses the scalability issues by leveraging the Markov property of many commonly used GP priors. It has been shown that such priors are solutions to stochastic PDEs (SPDEs) which when discretized allow for highly efficient GP regression through sparse linear algebra. In this work, we show how to leverage this prior class to make probabilistic PDE solvers practical, even for large-scale nonlinear PDEs, through greatly accelerated inference mechanisms. Additionally, our approach also allows for flexible and physically meaningful priors beyond what can be modeled with covariance functions. Experiments confirm substantial speedups and accelerated convergence of our physics-informed priors in nonlinear settings.

@inproceedings{Weiland2025GMRFPDESolvers, author = {Weiland, Tim and Pf\"ortner, Marvin and Hennig, Philipp}, title = {Flexible and Efficient Probabilistic {PDE} Solvers through {Gaussian} {Markov} Random Fields}, editor = {Li, Yingzhen and Mandt, Stephan and Agrawal, Shipra and Khan, Emtiyaz}, booktitle = {Proceedings of the 28th International Conference on Artificial Intelligence and Statistics (AISTATS)}, volume = {258}, series = {Proceedings of Machine Learning Research}, pages = {2746--2754}, publisher = {PMLR}, address = {Mai Khao, Thailand}, year = {2025}, archiveprefix = {arXiv}, eprint = {2503.08343}, primaryclass = {cs.LG}, doi = {10.48550/arXiv.2503.08343}, url = {https://proceedings.mlr.press/v258/weiland25a.html}, } -

In Proceedings of the 28th International Conference on Artificial Intelligence and Statistics (AISTATS), 2025

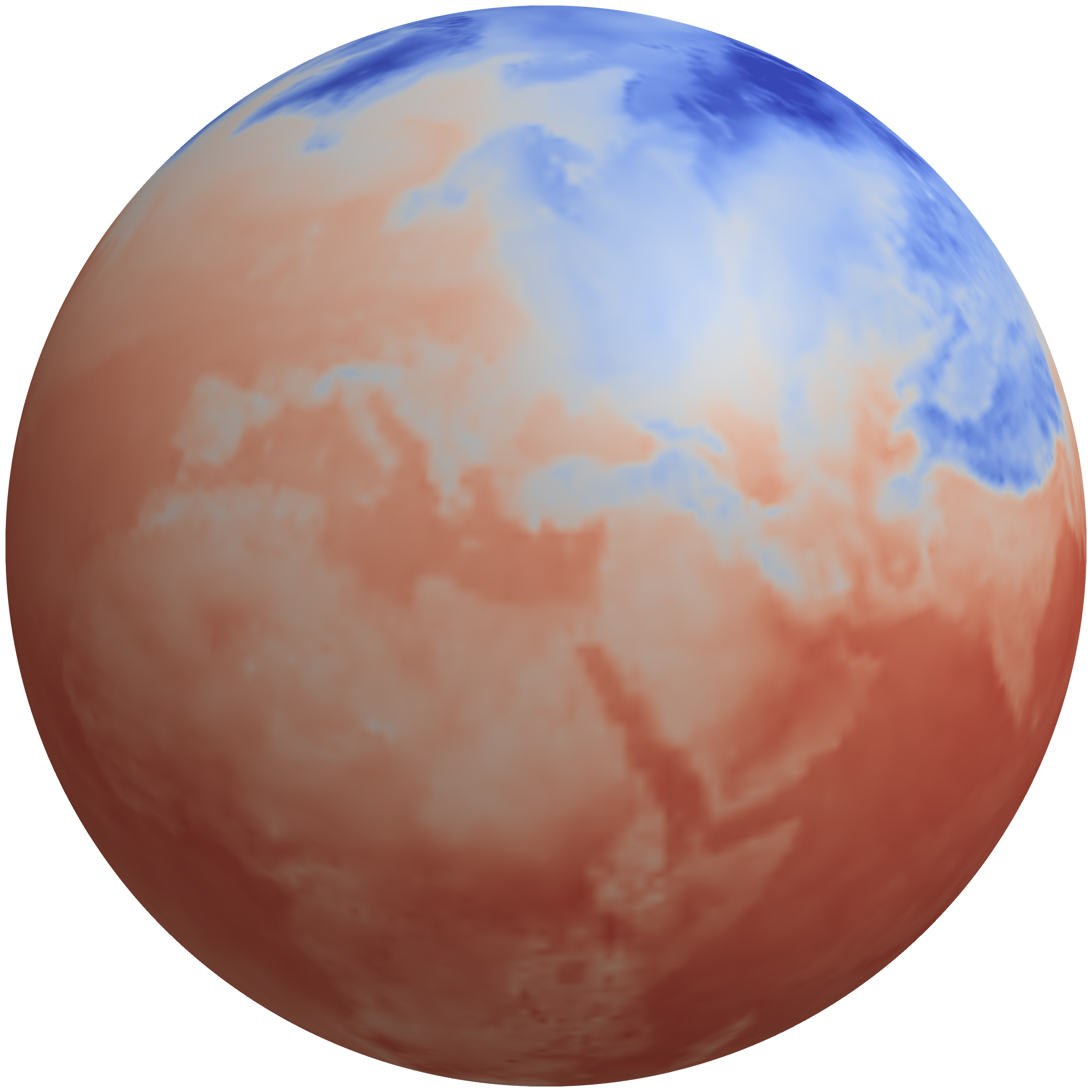

In Proceedings of the 28th International Conference on Artificial Intelligence and Statistics (AISTATS), 2025Kalman filtering and smoothing are the foundational mechanisms for efficient inference in Gauss-Markov models. However, their time and memory complexities scale prohibitively with the size of the state space. This is particularly problematic in spatiotemporal regression problems, where the state dimension scales with the number of spatial observations. Existing approximate frameworks leverage low-rank approximations of the covariance matrix. Since they do not model the error introduced by the computational approximation, their predictive uncertainty estimates can be overly optimistic. In this work, we propose a probabilistic numerical method for inference in high-dimensional Gauss-Markov models which mitigates these scaling issues. Our matrix-free iterative algorithm leverages GPU acceleration and crucially enables a tunable trade-off between computational cost and predictive uncertainty. Finally, we demonstrate the scalability of our method on a large-scale climate dataset.

@inproceedings{Pfoertner2025CAKF, author = {Pf\"ortner, Marvin and Wenger, Jonathan and Cockayne, Jon and Hennig, Philipp}, title = {Computation-Aware {Kalman} Filtering and Smoothing}, editor = {Li, Yingzhen and Mandt, Stephan and Agrawal, Shipra and Khan, Emtiyaz}, booktitle = {Proceedings of the 28th International Conference on Artificial Intelligence and Statistics (AISTATS)}, volume = {258}, series = {Proceedings of Machine Learning Research}, pages = {2071--2079}, publisher = {PMLR}, address = {Mai Khao, Thailand}, year = {2025}, archiveprefix = {arXiv}, eprint = {2405.08971}, primaryclass = {cs.LG}, doi = {10.48550/arxiv.2405.08971}, url = {https://proceedings.mlr.press/v258/pfortner25a.html}, }

2024

-

In Advances in Neural Information Processing Systems, 2024

In Advances in Neural Information Processing Systems, 2024Laplace approximations are popular techniques for endowing deep networks with epistemic uncertainty estimates as they can be applied without altering the predictions of the trained network, and they scale to large models and datasets. While the choice of prior strongly affects the resulting posterior distribution, computational tractability and lack of interpretability of the weight space typically limit the Laplace approximation to isotropic Gaussian priors, which are known to cause pathological behavior as depth increases. As a remedy, we directly place a prior on function space. More precisely, since Lebesgue densities do not exist on infinite-dimensional function spaces, we recast training as finding the so-called weak mode of the posterior measure under a Gaussian process (GP) prior restricted to the space of functions representable by the neural network. Through the GP prior, one can express structured and interpretable inductive biases, such as regularity or periodicity, directly in function space, while still exploiting the implicit inductive biases that allow deep networks to generalize. After model linearization, the training objective induces a negative log-posterior density to which we apply a Laplace approximation, leveraging highly scalable methods from matrix-free linear algebra. Our method provides improved results where prior knowledge is abundant (as is the case in many scientific inference tasks). At the same time, it stays competitive for black-box supervised learning problems, where neural networks typically excel.

@inproceedings{Cinquin2024FSPLaplace, author = {Cinquin, Tristan and Pf\"ortner, Marvin and Fortuin, Vincent and Hennig, Philipp and Bamler, Robert}, title = {{FSP-Laplace}: Function-Space Priors for the {Laplace} Approximation in {Bayesian} Deep Learning}, editor = {Globerson, A. and Mackey, L. and Belgrave, D. and Fan, A. and Paquet, U. and Tomczak, J. and Zhang, C.}, booktitle = {Advances in Neural Information Processing Systems}, volume = {37}, pages = {13897--13926}, publisher = {Curran Associates, Inc.}, year = {2024}, archiveprefix = {arXiv}, eprint = {2407.13711}, primaryclass = {cs.LG}, doi = {10.48550/arxiv.2407.13711}, url = {https://papers.neurips.cc/paper_files/paper/2024/hash/19774ce2d4b0d17a3a8aea26ad99fe8a-Abstract-Conference.html}, } -

Hrittik Roy, Marco Miani, Carl Henrik Ek, Philipp Hennig, Marvin Pförtner, Lukas Tatzel, and Søren HaubergIn Advances in Neural Information Processing Systems, 2024

Hrittik Roy, Marco Miani, Carl Henrik Ek, Philipp Hennig, Marvin Pförtner, Lukas Tatzel, and Søren HaubergIn Advances in Neural Information Processing Systems, 2024Current approximate posteriors in Bayesian neural networks (BNNs) exhibit a crucial limitation: they fail to maintain invariance under reparameterization, i.e. BNNs assign different posterior densities to different parametrizations of identical functions. This creates a fundamental flaw in the application of Bayesian principles as it breaks the correspondence between uncertainty over the parameters with uncertainty over the parametrized function. In this paper, we investigate this issue in the context of the increasingly popular linearized Laplace approximation. Specifically, it has been observed that linearized predictives alleviate the common underfitting problems of the Laplace approximation. We develop a new geometric view of reparametrizations from which we explain the success of linearization. Moreover, we demonstrate that these reparameterization invariance properties can be extended to the original neural network predictive using a Riemannian diffusion process giving a straightforward algorithm for approximate posterior sampling, which empirically improves posterior fit.

@inproceedings{Roy2024ReparameterizationBNN, author = {Roy, Hrittik and Miani, Marco and Ek, Carl Henrik and Hennig, Philipp and Pf\"ortner, Marvin and Tatzel, Lukas and Hauberg, S{\o}ren}, title = {Reparameterization invariance in approximate {Bayesian} inference}, editor = {Globerson, A. and Mackey, L. and Belgrave, D. and Fan, A. and Paquet, U. and Tomczak, J. and Zhang, C.}, booktitle = {Advances in Neural Information Processing Systems}, volume = {37}, pages = {8132--8164}, publisher = {Curran Associates, Inc.}, year = {2024}, archiveprefix = {arXiv}, eprint = {2406.03334}, primaryclass = {cs.LG}, doi = {10.48550/arxiv.2406.03334}, url = {https://papers.neurips.cc/paper_files/paper/2024/hash/0f934dd2030f5740cde0aa2697a105a9-Abstract-Conference.html}, } -

Tim Weiland, Marvin Pförtner, and Philipp Hennig2024

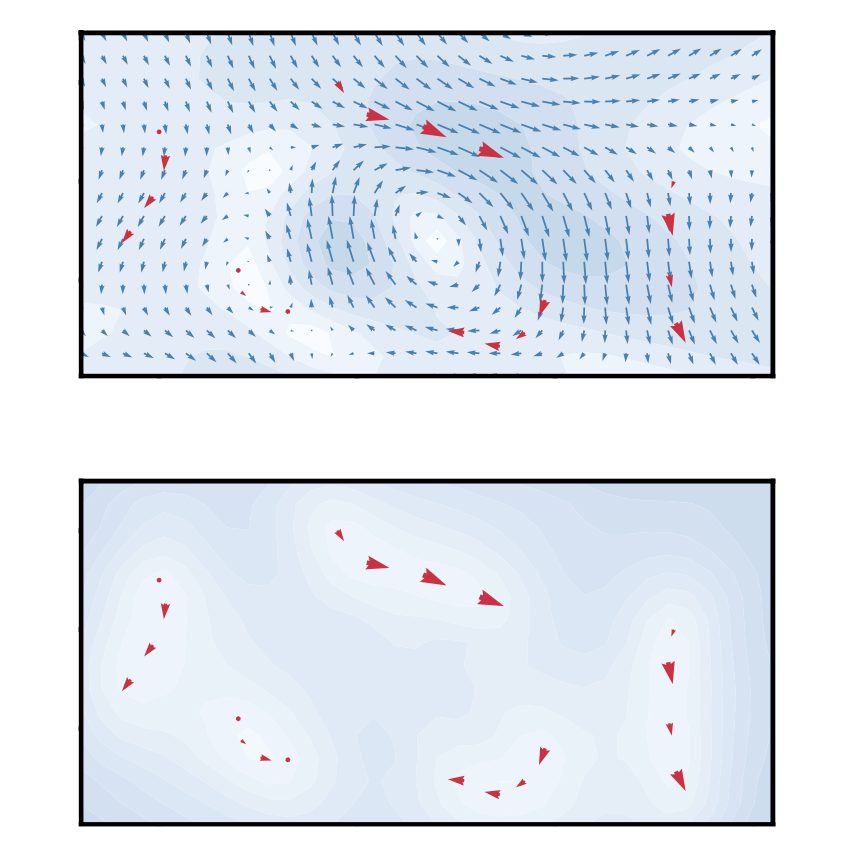

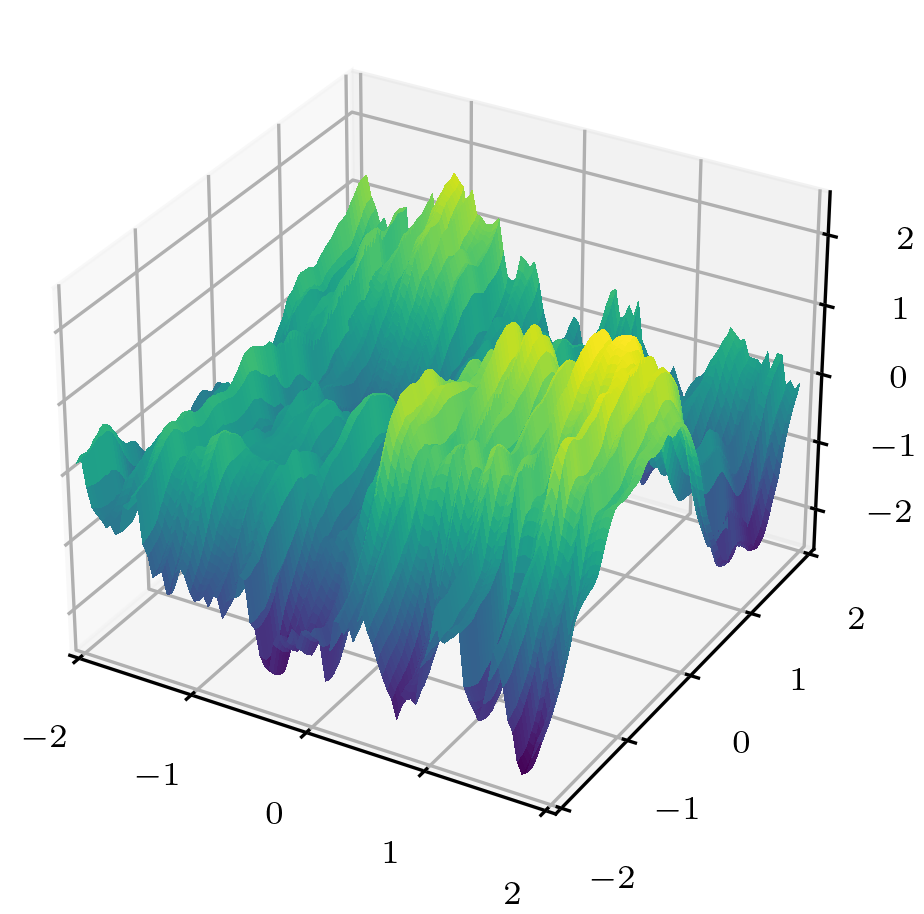

Tim Weiland, Marvin Pförtner, and Philipp Hennig2024Modeling real-world problems with partial differential equations (PDEs) is a prominent topic in scientific machine learning. Classic solvers for this task continue to play a central role, e.g. to generate training data for deep learning analogues. Any such numerical solution is subject to multiple sources of uncertainty, both from limited computational resources and limited data (including unknown parameters). Gaussian process analogues to classic PDE simulation methods have recently emerged as a framework to construct fully probabilistic estimates of all these types of uncertainty. So far, much of this work focused on theoretical foundations, and as such is not particularly data efficient or scalable. Here we propose a framework combining a discretization scheme based on the popular Finite Volume Method with complementary numerical linear algebra techniques. Practical experiments, including a spatiotemporal tsunami simulation, demonstrate substantially improved scaling behavior of this approach over previous collocation-based techniques.

@misc{Weiland2024GPFVM, author = {Weiland, Tim and Pf\"ortner, Marvin and Hennig, Philipp}, title = {Scaling up Probabilistic {PDE} Simulators with Structured Volumetric Information}, year = {2024}, archiveprefix = {arXiv}, eprint = {2406.05020}, primaryclass = {cs.LG}, doi = {10.48550/arxiv.2406.05020}, url = {https://arxiv.org/abs/2406.05020}, } -

Tobias Weber, Emilia Magnani, Marvin Pförtner, and Philipp HennigIn ICLR 2024 Workshop on AI4DifferentialEquations In Science, 2024

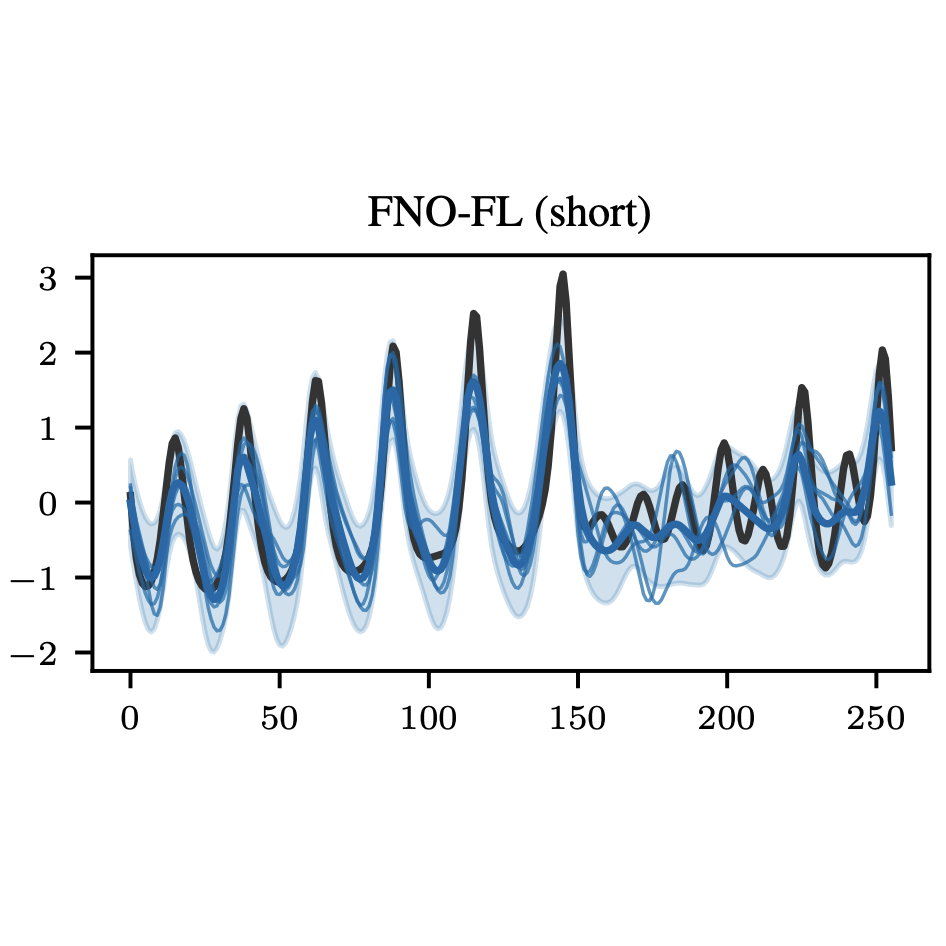

Tobias Weber, Emilia Magnani, Marvin Pförtner, and Philipp HennigIn ICLR 2024 Workshop on AI4DifferentialEquations In Science, 2024In medium-term weather forecasting, deep learning techniques have emerged as a strong alternative to classical numerical solvers for partial differential equations that describe the underlying physical system. While well-established deep learning models such as Fourier Neural Operators are effective at predicting future states of the system, extending these methods to provide ensemble predictions still poses a challenge. However, it is known that ensemble predictions are crucial in real-world applications such as weather, where local dynamics are not necessarily accounted for due to the coarse data resolution. In this paper, we explore different methods for generating ensemble predictions with Fourier Neural Operators trained on a simple one-dimensional PDE dataset: input perturbations and training for multiple outputs via a statistical loss function. Moreover, we formulate a new Laplace approximation for Fourier layers and show that it exhibits better uncertainty quantification for short training runs.

@inproceedings{Weber2024UQforFNOs, author = {Weber, Tobias and Magnani, Emilia and Pf\"ortner, Marvin and Hennig, Philipp}, title = {Uncertainty Quantification for {Fourier} Neural Operators}, booktitle = {ICLR 2024 Workshop on AI4DifferentialEquations In Science}, year = {2024}, url = {https://openreview.net/forum?id=knSgoNJcnV}, }

2023

-

2023

2023Gaussian processes (GPs) are the most common formalism for defining probability distributions over spaces of functions. While applications of GPs are myriad, a comprehensive understanding of GP sample paths, i.e. the function spaces over which they define a probability measure on, is lacking. In practice, GPs are not constructed through a probability measure, but instead through a mean function and a covariance kernel. In this paper we provide necessary and sufficient conditions on the covariance kernel for the sample paths of the corresponding GP to attain a given regularity. We use the framework of Hölder regularity as it grants us particularly straightforward conditions, which simplify further in the cases of stationary and isotropic GPs. We then demonstrate that our results allow for novel and unusually tight characterisations of the sample path regularities of the GPs commonly used in machine learning applications, such as the Matérn GPs.

@misc{DaCosta2023PathRegularityKernel, author = {Da Costa, Natha\"el and Pf\"ortner, Marvin and Da Costa, Lancelot and Hennig, Philipp}, title = {Sample Path Regularity of {Gaussian} Processes from the Covariance Kernel}, year = {2023}, archiveprefix = {arXiv}, eprint = {2312.14886}, primaryclass = {cs.LG}, doi = {10.48550/arxiv.2312.14886}, url = {https://arxiv.org/abs/2312.14886}, }

2022

-

2022

2022Linear partial differential equations (PDEs) are an important, widely applied class of mechanistic models, describing physical processes such as heat transfer, electromagnetism, and wave propagation. In practice, specialized numerical methods based on discretization are used to solve PDEs. They generally use an estimate of the unknown model parameters and, if available, physical measurements for initialization. Such solvers are often embedded into larger scientific models with a downstream application and thus error quantification plays a key role. However, by ignoring parameter and measurement uncertainty, classical PDE solvers may fail to produce consistent estimates of their inherent approximation error. In this work, we approach this problem in a principled fashion by interpreting solving linear PDEs as physics-informed Gaussian process (GP) regression. Our framework is based on a key generalization of the Gaussian process inference theorem to observations made via an arbitrary bounded linear operator. Crucially, this probabilistic viewpoint allows to (1) quantify the inherent discretization error; (2) propagate uncertainty about the model parameters to the solution; and (3) condition on noisy measurements. Demonstrating the strength of this formulation, we prove that it strictly generalizes methods of weighted residuals, a central class of PDE solvers including collocation, finite volume, pseudospectral, and (generalized) Galerkin methods such as finite element and spectral methods. This class can thus be directly equipped with a structured error estimate. In summary, our results enable the seamless integration of mechanistic models as modular building blocks into probabilistic models by blurring the boundaries between numerical analysis and Bayesian inference.

@misc{Pfoertner2022LinPDEGP, author = {Pf\"ortner, Marvin and Steinwart, Ingo and Hennig, Philipp and Wenger, Jonathan}, title = {Physics-Informed {Gaussian} Process Regression Generalizes Linear {PDE} Solvers}, year = {2022}, archiveprefix = {arXiv}, eprint = {2212.12474}, primaryclass = {cs.LG}, doi = {10.48550/arxiv.2212.12474}, url = {https://arxiv.org/abs/2212.12474}, } - In Advances in Neural Information Processing Systems, 2022

Gaussian processes scale prohibitively with the size of the dataset. In response, many approximation methods have been developed, which inevitably introduce approximation error. This additional source of uncertainty, due to limited computation, is entirely ignored when using the approximate posterior. Therefore in practice, GP models are often as much about the approximation method as they are about the data. Here, we develop a new class of methods that provides consistent estimation of the combined uncertainty arising from both the finite number of data observed and the finite amount of computation expended. The most common GP approximations map to an instance in this class, such as methods based on the Cholesky factorization, conjugate gradients, and inducing points. For any method in this class, we prove (i) convergence of its posterior mean in the associated RKHS, (ii) decomposability of its combined posterior covariance into mathematical and computational covariances, and (iii) that the combined variance is a tight worst-case bound for the squared error between the method’s posterior mean and the latent function. Finally, we empirically demonstrate the consequences of ignoring computational uncertainty and show how implicitly modeling it improves generalization performance on benchmark datasets.

@inproceedings{Wenger2022IterGP, author = {Wenger, Jonathan and Pleiss, Geoff and Pf\"ortner, Marvin and Hennig, Philipp and Cunningham, John P.}, title = {Posterior and Computational Uncertainty in {Gaussian} Processes}, editor = {Koyejo, S. and Mohamed, S. and Agarwal, A. and Belgrave, D. and Cho, K. and Oh, A.}, booktitle = {Advances in Neural Information Processing Systems}, volume = {35}, pages = {10876--10890}, publisher = {Curran Associates, Inc.}, year = {2022}, archiveprefix = {arXiv}, eprint = {2205.15449}, primaryclass = {cs.LG}, doi = {10.48550/arxiv.2205.15449}, url = {https://proceedings.neurips.cc/paper_files/paper/2022/hash/4683beb6bab325650db13afd05d1a14a-Abstract-Conference.html}, }

2021

-

Jonathan Wenger, Nicholas Krämer, Marvin Pförtner, Jonathan Schmidt, Nathanael Bosch, Nina Effenberger, Johannes Zenn, Alexandra Gessner, Toni Karvonen, François-Xavier Briol, Maren Mahsereci, and Philipp Hennig2021

Jonathan Wenger, Nicholas Krämer, Marvin Pförtner, Jonathan Schmidt, Nathanael Bosch, Nina Effenberger, Johannes Zenn, Alexandra Gessner, Toni Karvonen, François-Xavier Briol, Maren Mahsereci, and Philipp Hennig2021Probabilistic numerical methods (PNMs) solve numerical problems via probabilistic inference. They have been developed for linear algebra, optimization, integration and differential equation simulation. PNMs naturally incorporate prior information about a problem and quantify uncertainty due to finite computational resources as well as stochastic input. In this paper, we present ProbNum: a Python library providing state-of-the-art probabilistic numerical solvers. ProbNum enables custom composition of PNMs for specific problem classes via a modular design as well as wrappers for off-the-shelf use. Tutorials, documentation, developer guides and benchmarks are available online at http://www.probnum.org/.

@misc{Wenger2021ProbNum, author = {Wenger, Jonathan and Kr\"amer, Nicholas and Pf\"ortner, Marvin and Schmidt, Jonathan and Bosch, Nathanael and Effenberger, Nina and Zenn, Johannes and Gessner, Alexandra and Karvonen, Toni and Briol, Fran\c{c}ois-Xavier and Mahsereci, Maren and Hennig, Philipp}, title = {{ProbNum}: Probabilistic Numerics in {Python}}, year = {2021}, archiveprefix = {arXiv}, eprint = {2112.02100}, primaryclass = {cs.MS}, doi = {10.48550/arxiv.2112.02100}, url = {https://arxiv.org/abs/2112.02100}, }